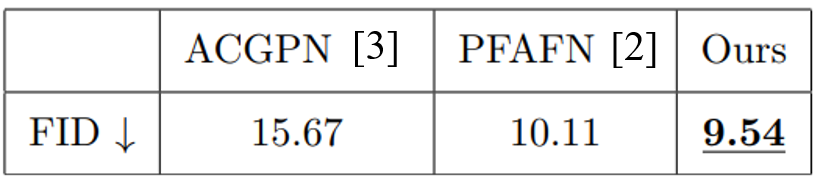

System Flowchart

A Virtual Try-On System with Skeleton-Retargeted Pose Transfer and Cloth Data Shaping

[基於骨架重定向姿勢轉移與服裝數據整形的虛擬試衣系統]

ABSTRACT:

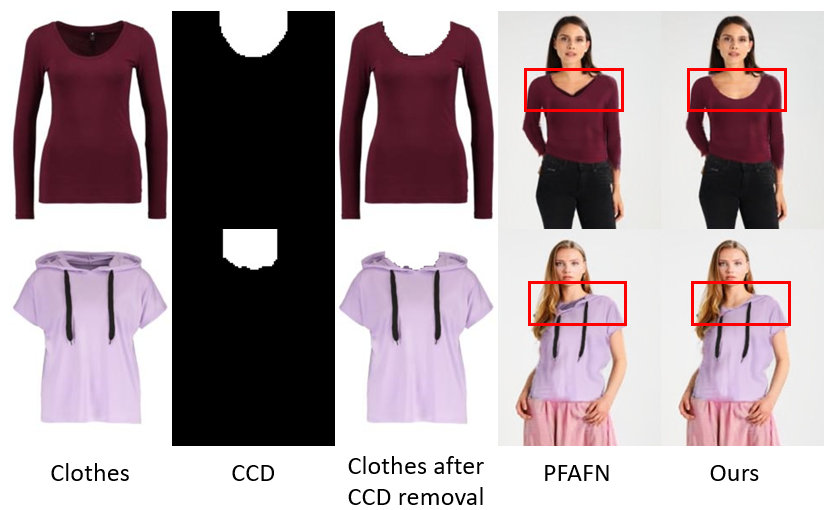

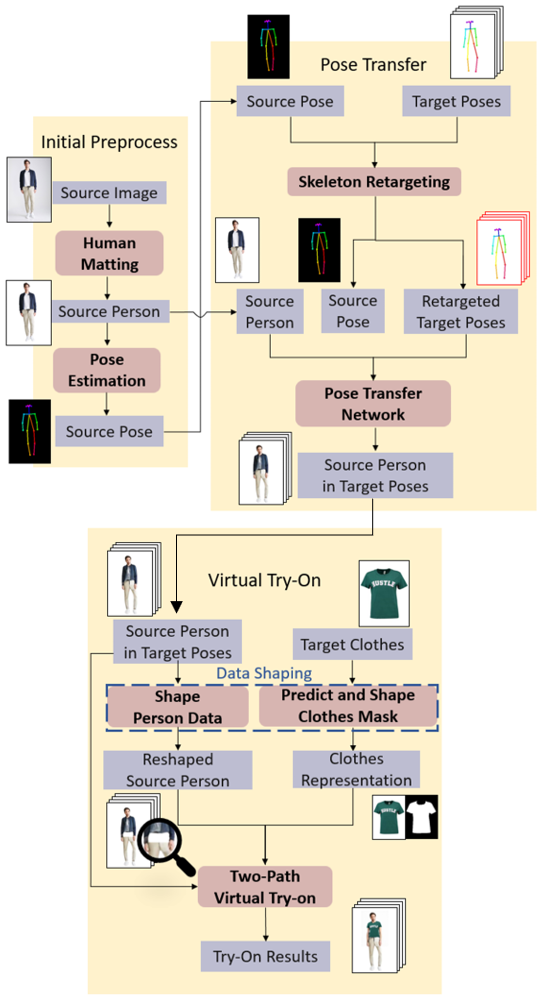

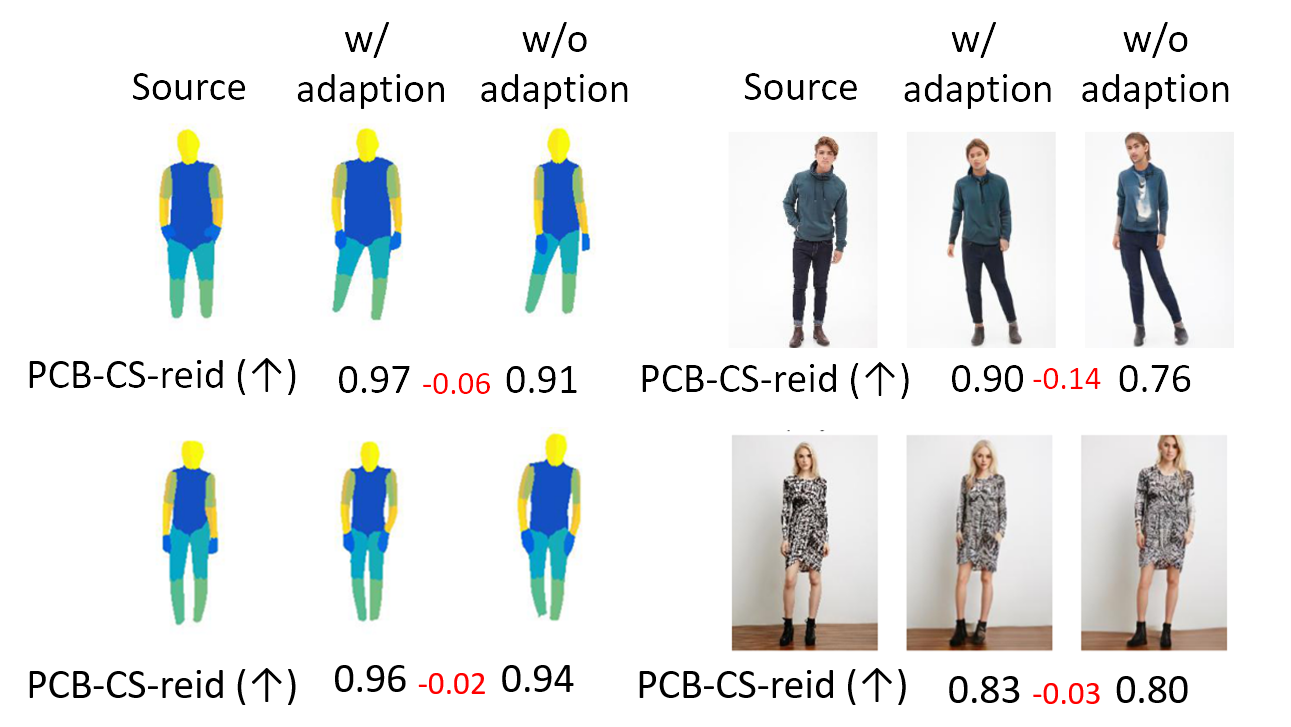

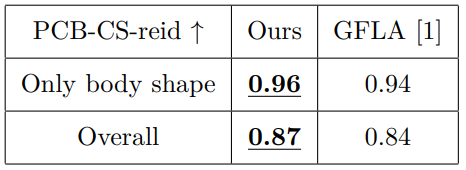

With the growing popularity of e-commerce platforms, more people are buying clothing online. While convenient, online shopping carries a higher risk of dissatisfaction because customers cannot try on clothes before purchasing. To address this issue, we developed a system that integrates pose transfer and virtual try-on techniques, enabling users to virtually try on various clothes and showcase the results in professional poses. However, traditional pose transfer methods often overlook differences in skeleton proportions, which leads to incorrect body shapes after pose transfer. In virtual try-on, previous approaches process clothes masks inadequately, so the inner lining around the collar is mistakenly applied to the body; in addition, certain characteristics of the input image cause specific defects that reduce the quality of the try-on results. To resolve these challenges, we propose two key improvements: (1) applying skeleton retargeting, based on a motion retargeting technique, before pose transfer to preserve the user's skeleton proportions, and (2) performing data shaping on the input data, including clothes masks and user images, to eliminate the factors that cause these defects. Experimental results demonstrate that, compared to baseline methods, our method improves the PCB-CS-reid metric for pose transfer by 0.03 and the Fréchet Inception Distance (FID) metric for virtual try-on by 0.57. Qualitative evaluations further highlight significant visual improvements. These findings validate that our approach generates more reasonable and realistic images, producing a sequence of try-on images that enhances the online shopping experience.
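To illustrate the idea behind improvement (1), the following is a minimal geometric sketch, not the system's actual implementation. The system builds on a motion retargeting technique, whereas this sketch swaps in a simple rule: take each bone's direction from the target pose and its length from the user's skeleton. The 5-joint layout, the `PARENTS` list, and the function name `retarget_skeleton` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical 5-joint 2D skeleton. PARENTS[j] is the parent of joint j
# (-1 marks the root). Parents must appear before their children.
PARENTS = [-1, 0, 1, 2, 1]


def retarget_skeleton(user_joints, target_joints, parents=PARENTS):
    """Return the target pose rebuilt with the user's bone lengths.

    Assumption for this sketch: both skeletons are (J, 2) arrays of
    2D keypoints that share the same joint order and hierarchy.
    """
    user = np.asarray(user_joints, dtype=float)
    target = np.asarray(target_joints, dtype=float)
    out = np.zeros_like(target)

    for j, p in enumerate(parents):
        if p < 0:
            # Place the root where the target pose has it.
            out[j] = target[j]
            continue
        # Bone direction comes from the target pose.
        direction = target[j] - target[p]
        norm = np.linalg.norm(direction)
        if norm > 0:
            direction = direction / norm
        # Bone length comes from the user's skeleton.
        length = np.linalg.norm(user[j] - user[p])
        out[j] = out[p] + length * direction
    return out


# Example: the user has unit-length bones, the target has bones of length 2.
user = [[0, 0], [0, 1], [0, 2], [0, 3], [1, 1]]
target = [[5, 5], [5, 7], [7, 7], [7, 9], [3, 7]]
retargeted = retarget_skeleton(user, target)
```

In this example the retargeted skeleton follows the target's bone directions while every bone keeps the user's length of 1, which is the property the abstract describes as maintaining the user's skeleton proportions.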

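SUMMARY:

As e-commerce platforms become widespread, more and more people prefer to buy clothing online. This is convenient, but the inability to try items on increases the risk of the purchase. To solve this problem, we developed a system that combines pose transfer and virtual try-on, allowing users not only to virtually try on various garments but also to present the outfit in a model's poses. However, traditional pose transfer techniques often ignore differences in human skeleton proportions, which distorts the body shape after the pose is transferred. In addition, previous virtual try-on techniques do not adequately process the clothes mask, so the inner lining at the collar is easily pasted onto the body along with the garment, or specific defects arise from characteristics of the input portrait. To address these problems, we propose two improvements: first, adding skeleton adjustment based on motion retargeting before pose transfer to preserve the user's body proportions; second, performing data shaping on the input data (such as clothes masks and portraits) to eliminate the potential factors that cause defects. Experimental results show that our method improves the PCB-CS-reid metric for pose transfer by 0.03 and the Fréchet Inception Distance (FID) metric for virtual try-on by 0.57, and shows significant visual improvement in qualitative evaluation. The experiments demonstrate that our method generates more reasonable and realistic images and produces a series of try-on photos that improve the online shopping experience.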

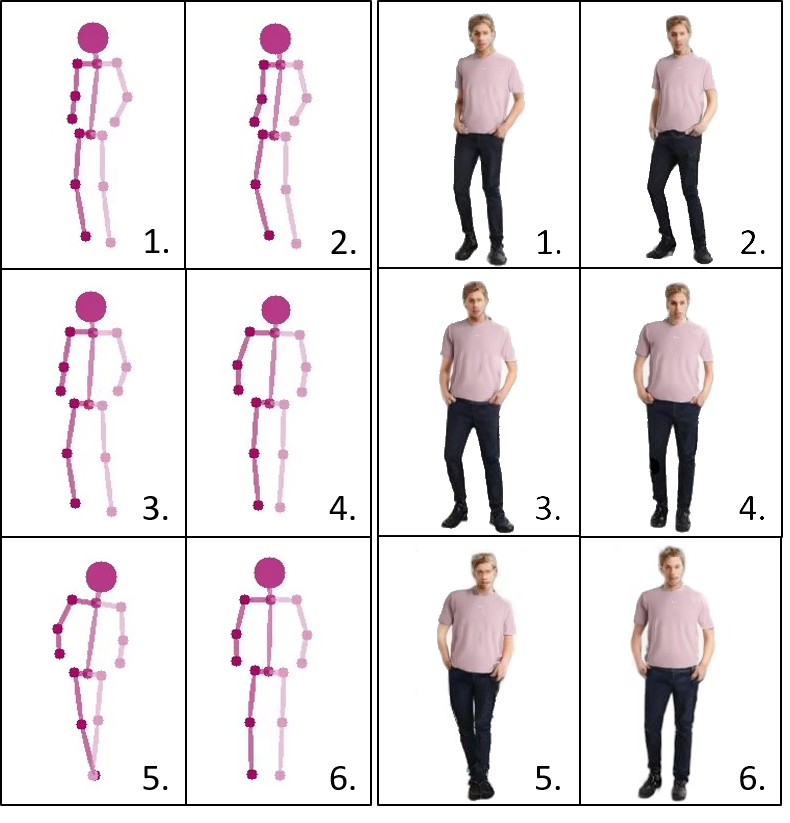

RESULTS:

| Demo |
|---|
| A sequence of target poses and try-on images |

REFERENCES:

[1] Yurui Ren, Xiaoming Yu, Junming Chen, Thomas H Li, and Ge Li. Deep image spatial transformation for person image generation. arXiv preprint arXiv:2003.00696, 2020.

[2] Yuying Ge, Yibing Song, Ruimao Zhang, Chongjian Ge, Wei Liu, and Ping Luo. Parser-free virtual try-on via distilling appearance flows. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 8481–8489, 2021.

[3] Han Yang, Ruimao Zhang, Xiaobao Guo, Wei Liu, Wangmeng Zuo, and Ping Luo. Towards photo-realistic virtual try-on by adaptively generating↔preserving image content. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 7847–7856, 2020.

[4] Kfir Aberman, Rundi Wu, Dani Lischinski, Baoquan Chen, and Daniel Cohen-Or. Learning character-agnostic motion for motion retargeting in 2d. ACM Transactions on Graphics, 38(4):1–14, 2019.

Skeleton-Retargeted Pose Transfer

Virtual Try-On with Data Shaping