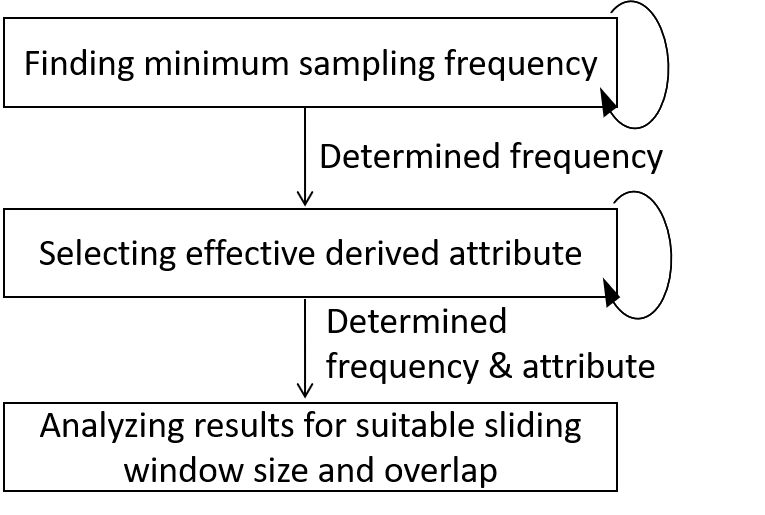

Flowchart for deciding suitable shaping parameters.

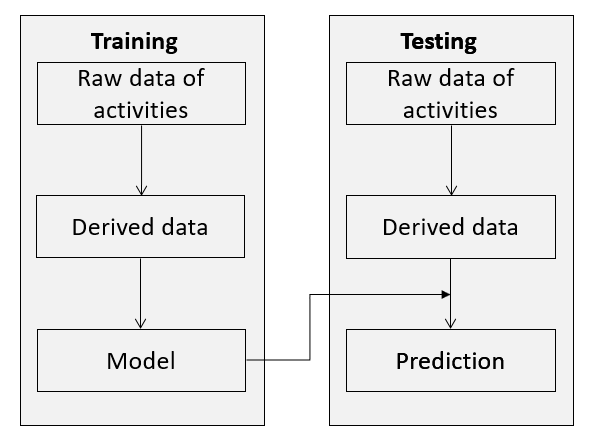

Flowchart for training and testing on derived data.

[A Data Shaping Technique for Activity Recognition Data Based on Convolutional Neural Networks]

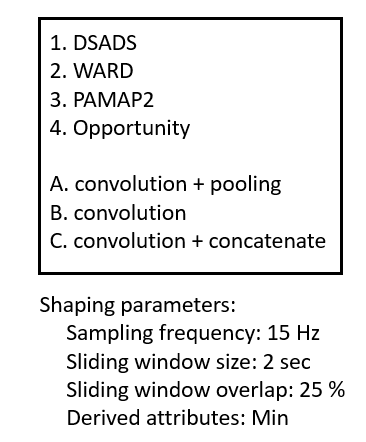

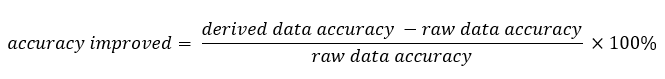

Today, recognition tasks are almost reflexively associated with neural networks, and the prevailing assumption when training them has been that more data is always better. However, as the data grows without any filtering, it may contain redundant samples from which the network extracts repetitive features. Our goal is therefore to find efficient input data rather than simply to feed in more of it, and doing so yielded higher recognition accuracy. To obtain efficient input attributes for activity recognition, we propose a data preprocessing procedure called data shaping, governed by four parameters: sampling frequency, sliding window size, sliding window overlap rate, and derived attributes. Data shaping converts raw data into derived data, making the data more representative and thereby improving accuracy. We ran experiments on the DSADS, WARD, Opportunity, UCI-HAR and PAMAP2 datasets, training and testing with three types of convolutional neural networks. The experimental results show that applying our method to raw data does increase recognition accuracy.
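The four shaping parameters can be illustrated with a minimal pure-Python sketch that turns a raw multichannel stream into derived windows. It rests on several assumptions not stated in the abstract: the sampling frequency is lowered by plain integer-ratio decimation (no anti-aliasing filter), the overlap rate is the fraction of samples shared by consecutive windows, and the derived attribute is a per-sample vector magnitude chosen only as an example. All function names (`downsample`, `sliding_windows`, `add_magnitude`, `shape_data`) are hypothetical.

```python
import math
from typing import List

Sample = List[float]   # one multichannel reading, e.g. [acc_x, acc_y, acc_z]
Window = List[Sample]


def downsample(signal: List[Sample], src_hz: int, dst_hz: int) -> List[Sample]:
    """Lower the sampling frequency by keeping every k-th sample (k = src_hz // dst_hz).

    Simplification: plain decimation with no anti-aliasing low-pass filter.
    """
    if dst_hz <= 0 or dst_hz > src_hz or src_hz % dst_hz != 0:
        raise ValueError("src_hz must be a positive integer multiple of dst_hz")
    return signal[:: src_hz // dst_hz]


def sliding_windows(signal: List[Sample], window_size: int, overlap_rate: float) -> List[Window]:
    """Cut the stream into fixed-size windows; consecutive windows share
    roughly overlap_rate * window_size samples. Incomplete tail windows are dropped."""
    if window_size <= 0 or not 0.0 <= overlap_rate < 1.0:
        raise ValueError("need window_size > 0 and 0 <= overlap_rate < 1")
    stride = max(1, round(window_size * (1.0 - overlap_rate)))
    last_start = len(signal) - window_size
    return [signal[s:s + window_size] for s in range(0, last_start + 1, stride)]


def add_magnitude(window: Window, axes=(0, 1, 2)) -> Window:
    """Hypothetical derived attribute: append the Euclidean norm of the chosen axes."""
    return [row + [math.sqrt(sum(row[i] ** 2 for i in axes))] for row in window]


def shape_data(signal: List[Sample], src_hz: int, dst_hz: int,
               window_size: int, overlap_rate: float) -> List[Window]:
    """Apply the shaping parameters in order: frequency, window size, overlap, derived attributes."""
    resampled = downsample(signal, src_hz, dst_hz)
    return [add_magnitude(w) for w in sliding_windows(resampled, window_size, overlap_rate)]
```

For example, `shape_data(signal, 100, 50, 4, 0.5)` halves a 100 Hz stream to 50 Hz and cuts it into 4-sample windows that advance by 2 samples, each sample gaining one extra magnitude column. A real pipeline would likely use a filtered resampler and whatever derived attributes the shaping-parameter search selects.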

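SUMMARY (Chinese summary):

The goal of this thesis is to find shaping parameters that reliably improve accuracy, and it makes two contributions. First, we identify the lowest sampling frequency that is sufficient for recognizing activities. During training, data sampled at a higher frequency does not necessarily yield more features: once the frequency is high enough, the features it can provide saturate. Our experiments confirm that such a minimum frequency sufficient for activity recognition does exist.

Second, our proposed specification generalizes, which is highly significant because it means the specification can be used on different datasets. Even when the activities are the same, datasets inevitably differ in sensor brand, number of sensors, and sensor placement, so whether a specification generalizes is especially important.

We used four datasets, DSADS, WARD, Opportunity and PAMAP2, to verify that our proposed specification generalizes and that the shaping parameters remain effective even under different CNN architectures.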
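The first contribution, finding the lowest sampling frequency beyond which features saturate, can be phrased as a simple selection rule over measured accuracies. The sketch below assumes that "saturated" means the accuracy is within a fixed absolute tolerance of the best accuracy observed across the candidate frequencies; the summary does not state the exact criterion, so `lowest_sufficient_frequency` and its `tolerance` parameter are hypothetical.

```python
from typing import Dict


def lowest_sufficient_frequency(accuracy_by_hz: Dict[int, float],
                                tolerance: float = 0.01) -> int:
    """Return the lowest sampling frequency whose accuracy is within `tolerance`
    of the best accuracy measured over all candidate frequencies.

    `tolerance` is a hypothetical saturation threshold expressed as a fraction
    (0.01 = one percentage point of accuracy).
    """
    if not accuracy_by_hz:
        raise ValueError("need at least one (frequency, accuracy) measurement")
    best = max(accuracy_by_hz.values())
    # Every frequency at or above the saturation threshold is "sufficient";
    # the lowest such frequency is the cheapest one to collect and train on.
    return min(hz for hz, acc in accuracy_by_hz.items() if acc >= best - tolerance)
```

In practice, `accuracy_by_hz` would be filled by training and testing the same CNN on data resampled to each candidate frequency. Choosing a larger tolerance trades a small amount of accuracy for a lower frequency.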

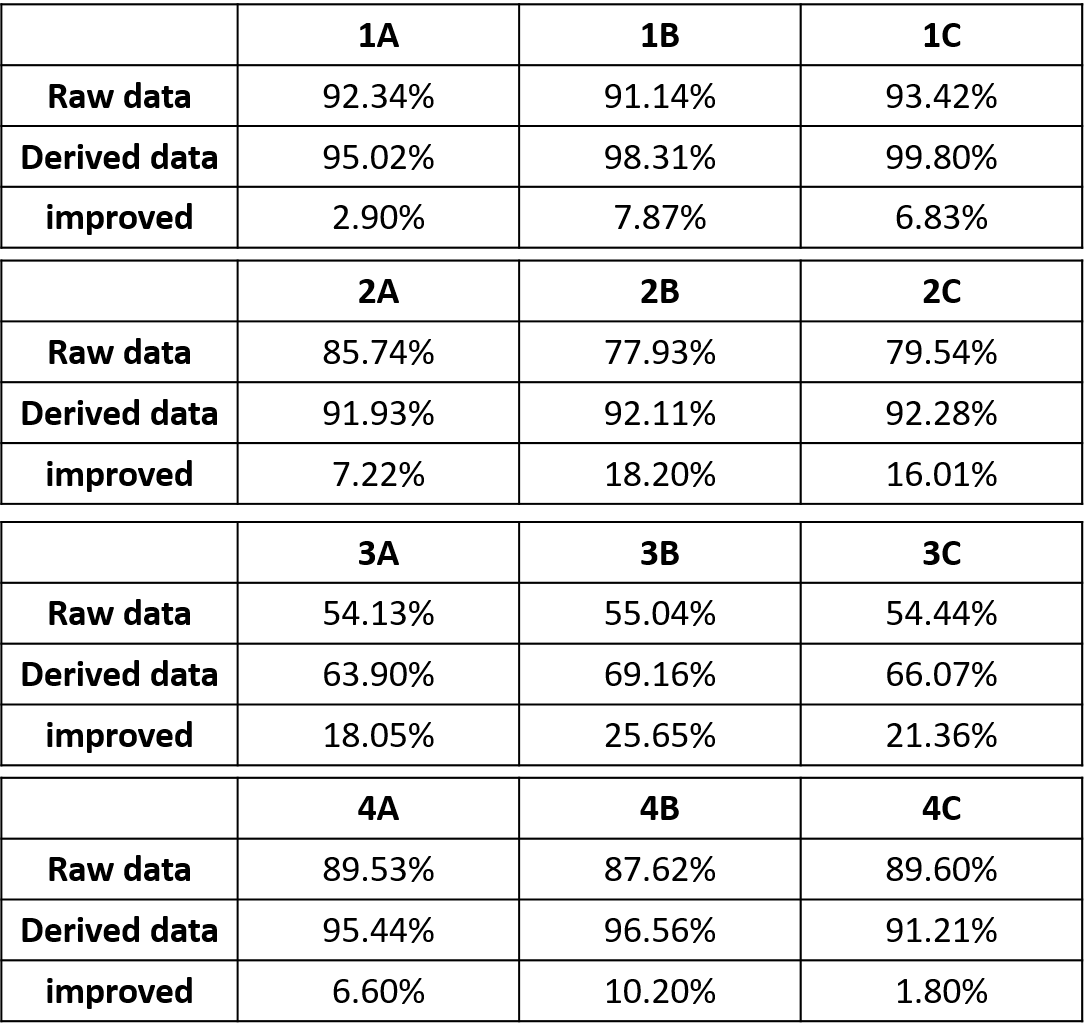

RESULTS:

Results of shaped data on 4 datasets trained with 3 architectures.
