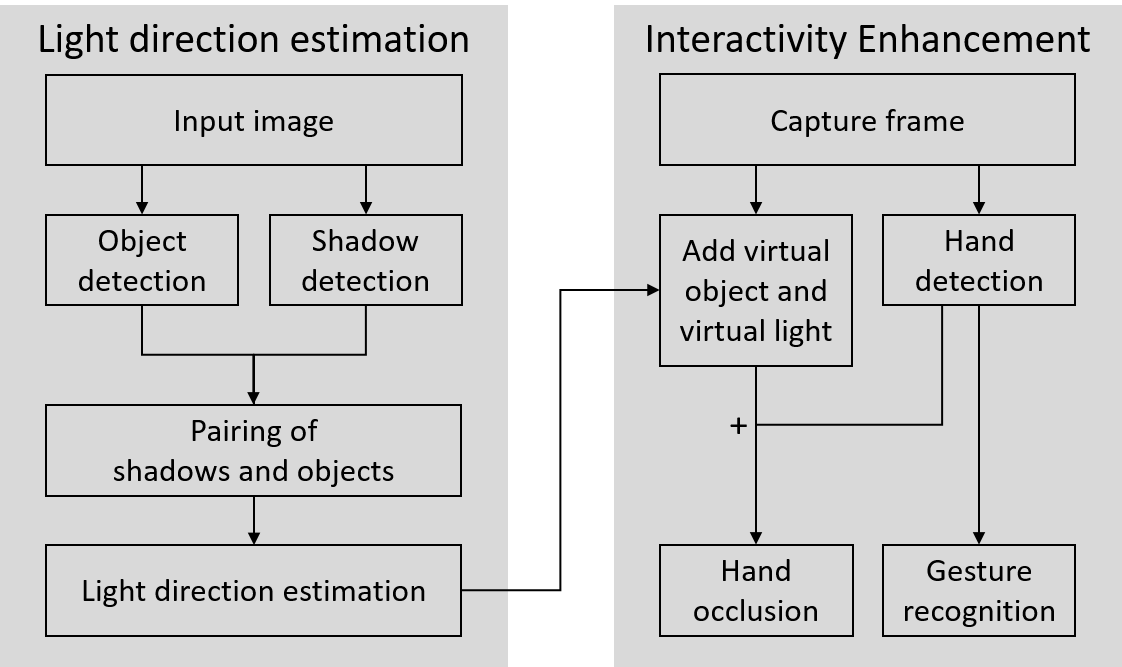

System flowchart

ABSTRACT:

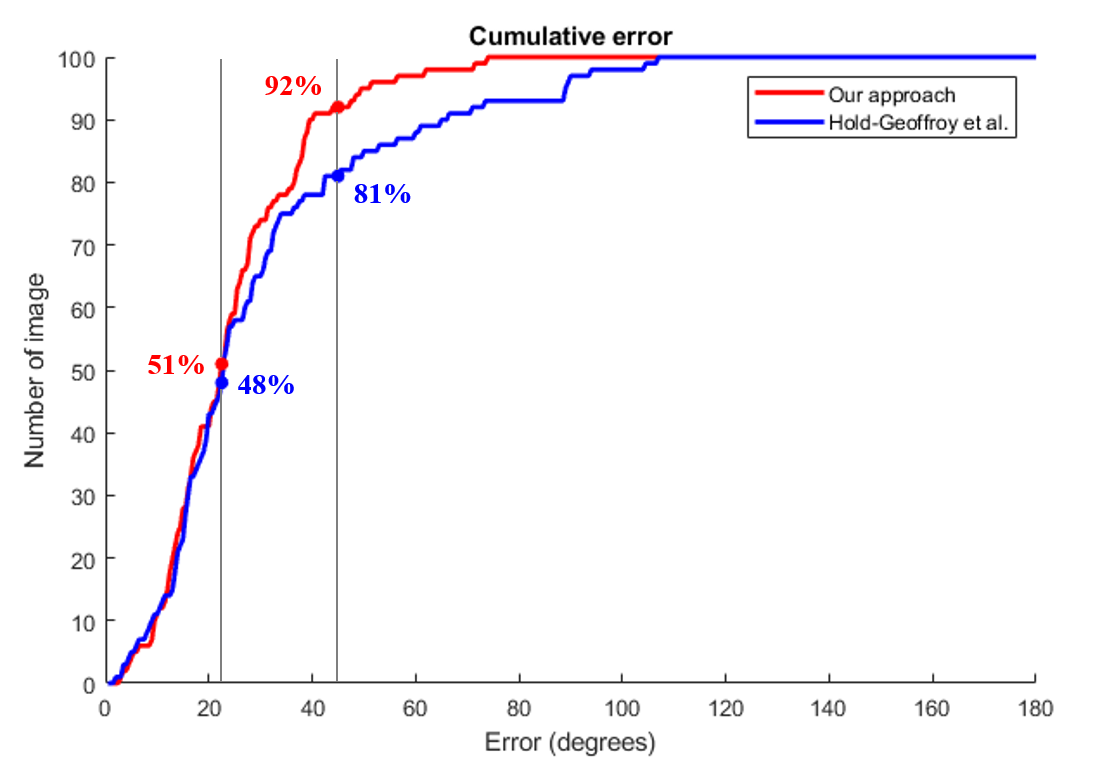

Augmented reality is a technology that combines the virtual world with the real world, and improving its realism is an important topic. This paper focuses on two aspects: lighting consistency between the virtual and real worlds, and hand-based interaction with virtual objects. Traditional methods for estimating lighting conditions often require substantial prior knowledge of the scene. We propose a method that estimates the light direction from shadows and foreground objects using only a single scene image. We detect each object and its shadow in the scene and compute their relative direction to estimate the azimuth of the light, and we use the ratio of the object's area to its shadow's area to estimate the elevation angle of the light. We tested our method on several real scenes. Because the exact light direction in the real world is difficult to acquire, we further verified the method on a number of virtual scenes with preset light directions. In addition, hand-gesture-based human-computer interaction provides a natural and easy way to interact. Traditional augmented reality interaction relies on markers or touch screens. We apply gesture recognition and touchable hand interaction in augmented reality, allowing the user's hand to occlude the virtual object in the image while the gesture is recognized. By adding estimated lighting and touchable hand interaction, we enhance the realism of augmented reality.

SUMMARY (translated from Chinese):

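Augmented reality is a technology that combines the virtual world with the real world, and making virtual objects blend seamlessly into a real scene is an important problem. One focus of this thesis is lighting consistency between the virtual and real worlds; the other is interaction with virtual objects using the hands.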

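This thesis proposes a method that infers the light direction of the real environment from the shadows and foreground objects in a single scene image. We detect each object and its shadow, compute their relative position in the scene to estimate the azimuth of the light, and use the ratio of the object's area to its shadow's area to estimate the elevation angle of the light. We tested the method on several real scenes, but the exact light direction in the real world is difficult to determine, so we further verified its accuracy by building virtual scenes with known light directions.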
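The two estimates described above can be illustrated with a minimal Python sketch. It is not the method as implemented in this work: the function name, the use of centroids, and the mapping from area ratio to elevation are all assumptions made for illustration. In particular, it assumes the object and its shadow have roughly the same width, so that the area ratio approximates the ratio of object height to shadow length, which equals the tangent of the elevation angle. The azimuth is measured in the image plane, in image coordinates.

```python
import math


def estimate_light_direction(object_centroid, shadow_centroid,
                             object_area, shadow_area):
    """Return (azimuth_deg, elevation_deg) under simplifying assumptions.

    All inputs are hypothetical: centroids are (x, y) image coordinates and
    areas are pixel counts of the segmented object and shadow regions.
    """
    ox, oy = object_centroid
    sx, sy = shadow_centroid

    # A shadow falls away from the light, so the vector from the shadow
    # to the object points toward the light source in the image plane.
    dx, dy = ox - sx, oy - sy
    if dx == 0 and dy == 0:
        raise ValueError("object and shadow centroids coincide")
    azimuth_deg = math.degrees(math.atan2(dy, dx)) % 360.0

    if object_area <= 0 or shadow_area <= 0:
        raise ValueError("areas must be positive")

    # Assumption: equal widths, so object_area / shadow_area approximates
    # height / shadow_length = tan(elevation).
    elevation_deg = math.degrees(math.atan2(object_area, shadow_area))
    return azimuth_deg, elevation_deg
```

Under this simplified model, a shadow with the same area as the object gives an elevation of about 45 degrees, and a shadow much larger than the object gives a low elevation, matching the intuition that long shadows indicate a light source near the horizon.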

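Gesture-based human-computer interaction provides a natural and simple way to interact. Traditional augmented reality interaction relies on markers or touch screens. We apply gesture recognition and touchable hand interaction to augmented reality, allowing the user's hand to directly touch and occlude the virtual object in the image while the gesture is recognized. By adding light estimation and touchable hand interaction, we improve the realism of augmented reality.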
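Hand occlusion of a virtual object can be sketched as a per-pixel compositing rule. The following Python example is only an illustration under stated assumptions: it assumes that binary masks for the hand and for the rendered virtual object are already available (the source does not say how they are obtained), and the function names are hypothetical. Images are represented as nested lists to keep the sketch free of dependencies.

```python
def composite_with_hand_occlusion(frame, virtual, virtual_mask, hand_mask):
    """Overlay the virtual object on the camera frame, except under the hand.

    frame, virtual: 2D nested lists of pixel values with the same shape.
    virtual_mask, hand_mask: 2D nested lists of truthy/falsy values
    (assumed to come from some rendering and hand segmentation step).
    """
    output = []
    for y, row in enumerate(frame):
        out_row = []
        for x, camera_pixel in enumerate(row):
            # Draw the virtual object only where it is visible and the
            # hand is not in front of it; otherwise keep the camera pixel.
            if virtual_mask[y][x] and not hand_mask[y][x]:
                out_row.append(virtual[y][x])
            else:
                out_row.append(camera_pixel)
        output.append(out_row)
    return output


def hand_touches_object(virtual_mask, hand_mask):
    """Return True if any pixel belongs to both the hand and the object."""
    return any(
        v and h
        for v_row, h_row in zip(virtual_mask, hand_mask)
        for v, h in zip(v_row, h_row)
    )
```

Keeping the camera pixel wherever the hand mask is set makes the hand appear in front of the virtual object, and the overlap test gives a simple trigger for touch interaction.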

PROJECT MATERIAL:

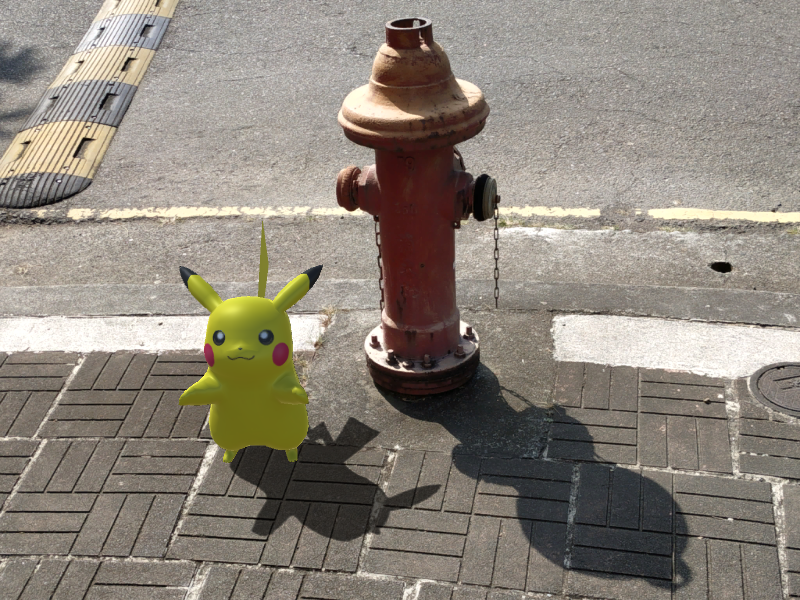

- Result

[1] Frederic Cambon. Pikachu model. https://sketchfab.com/3d-models/025-pikachu-e69fbccccf1449acb0d9328ac9bea79d, 2014.

[2] Matterport. Internet images for virtual object insertion. https://github.com/matterport/Mask_RCNN/tree/master/images, 2017.

[3] Shih-Hsiu Chang, Ching-Ya Chiu, Chia-Sheng Chang, Kuo-Wei Chen, Chih-Yuan Yao, Ruen-Rone Lee, and Hung-Kuo Chu. Generating 360 outdoor panorama dataset with reliable sun position estimation. In SIGGRAPH Asia 2018 Posters, page 22, 2018.

[4] Yannick Hold-Geoffroy, Kalyan Sunkavalli, Sunil Hadap, Emiliano Gambaretto, and Jean-Francois Lalonde. Deep outdoor illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7312–7321, 2017.

Cumulative angular error distribution comparison with Hold-Geoffroy et al. [4]
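For readers unfamiliar with this kind of plot, the sketch below shows one common way to compute an angular error between an estimated and a ground-truth light direction, and the cumulative fraction of test cases below each error threshold. The function names and the azimuth/elevation convention are assumptions for illustration; the exact evaluation protocol used for the figure is not specified here.

```python
import math


def direction_vector(azimuth_deg, elevation_deg):
    """Convert azimuth/elevation in degrees to a 3D unit vector."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def angular_error_deg(estimated, ground_truth):
    """Angle in degrees between two (azimuth_deg, elevation_deg) directions."""
    a = direction_vector(*estimated)
    b = direction_vector(*ground_truth)
    dot = sum(p * q for p, q in zip(a, b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    dot = max(-1.0, min(1.0, dot))
    return math.degrees(math.acos(dot))


def cumulative_distribution(errors, thresholds):
    """Fraction of errors less than or equal to each threshold."""
    n = len(errors)
    return [sum(e <= t for e in errors) / n for t in thresholds]
```

A curve that rises faster indicates that a larger share of test images falls under a given angular error.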
